Daily briefing: ‘Mind captioning’ AI describes the images in your head

Summary

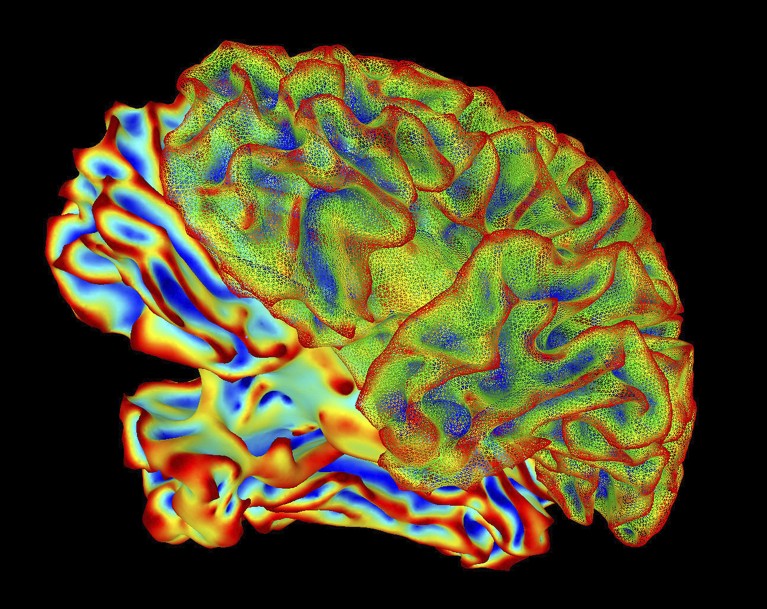

Nature’s daily briefing highlights a striking AI advance — a technique dubbed “mind captioning” that translates fMRI brain scans into descriptive sentences of what a person is seeing or imagining. The method pairs brain-activity data with text captions from thousands of videos to train models that map neural patterns to language. Researchers say the approach could aid people with speech or language difficulties, but it raises serious mental-privacy and ethical concerns as the technology edges closer to reading internal imagery.

The briefing also summarises other items: DNA analysis clears Clarion Island’s spiny-tailed iguanas of being human-introduced invaders; a major UK study finds cardiovascular risks in children are higher after COVID-19 infection than after vaccination; and a feature explores the global ‘shadow scholars’ who write academic work for others.

Key Points

- “Mind captioning” uses AI trained on video captions plus fMRI scans to generate sentences describing images in a person’s mind.

- The technique links neural activity patterns to linguistic descriptions by learning from large-scale video–caption datasets and matched brain scans.

- Potential clinical benefit: could offer a communication aid for people with severe language impairments or locked-in conditions.

- Major ethical worry: increasing risk to mental privacy — the tech could, in theory, reveal intimate or private thoughts if misapplied.

- Clarion Island iguanas were likely native: mitochondrial DNA suggests they split from mainland relatives ~426,000 years ago, a finding that averted a planned extermination.

- A large study in England finds that, in children, the risks of rare vascular and inflammatory conditions are lower after COVID-19 vaccination (focusing on the Pfizer-BioNTech vaccine) than after infection.

- Feature: ‘Shadow scholars’ documentary exposes contract-cheating networks in which highly capable writers in poorer countries produce academic work for wealthier clients.

Context and relevance

The mind-captioning item sits at the intersection of AI, neuroscience and ethics. It demonstrates how multimodal AI (linking vision, language and brain data) is moving from proof-of-concept towards plausible real-world use. For researchers and policymakers, this is a call to act early: clinical promise exists, but so do urgent questions about consent, data governance and the potential for misuse.

For a broader audience, the briefing packages several short pieces that matter: conservation policy (iguanas), public-health evidence on vaccination risk trade-offs, and the global economics of academic labour. Together they reflect ongoing trends — AI accelerating into sensitive domains, genomic evidence reshaping conservation choices, and persistent inequalities in academic work.

Why should I read this

Because it’s part fascinating and part worrying: AI that can “caption” what’s in your head sounds like sci‑fi, but it’s happening. If you care about where tech meets ethics, or want a quick roundup of other notable science stories (iguanas spared, vaccine vs infection risks, and the shadow-essay industry), this briefing saves you time and flags what to look into next.