Glasses-free 3D display with ultrawide viewing range using deep learning

Article metadata

Article Date: 26 November 2025

Article URL: https://www.nature.com/articles/s41586-025-09752-y

Image: Figure 1

Summary

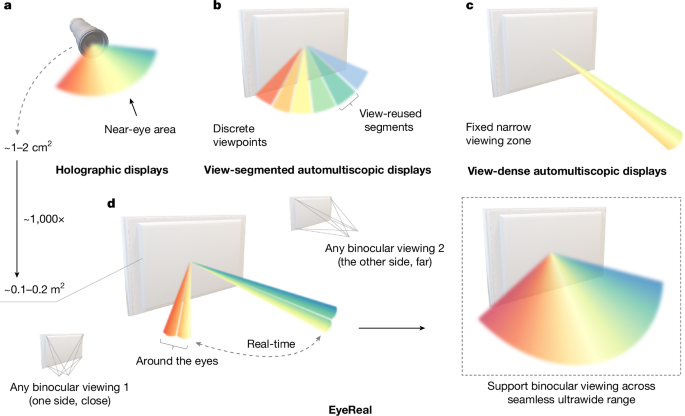

This Nature paper introduces EyeReal, a computational approach that combines physically accurate binocular modelling with deep learning to produce a glasses-free (autostereoscopic) 3D display at desktop scale. Rather than trying to massively increase hardware space–bandwidth product (SBP), EyeReal dynamically reallocates the limited SBP in real time to the region around viewers’ eyes, synthesising physically consistent light fields for binocular viewpoints and their neighbourhoods. The method runs on consumer-grade multilayer LCD stacks (no bespoke optics), delivers full-parallax cues (stereo, motion and focal parallax), operates at ~50 Hz with 1,920 × 1,080 output, and supports an ultrawide viewing range (well beyond 100°) in prototype demonstrations. The team provides datasets and code on GitHub and validates performance across synthetic and real scenes, showing improved view consistency, focal discrimination and real-time speed versus existing view-segmented or view-dense methods.
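To make "binocular viewpoints and their neighbourhoods" concrete, here is a minimal sketch of how such target viewpoints could be enumerated from a tracked head position. It assumes a screen-centred frame in millimetres (x to the viewer's right, y up, z towards the viewer), a known inter-eye midpoint and head yaw, and a square grid of nearby viewpoints around each eye. The names `Vec3`, `eye_positions` and `neighbourhood`, the 63 mm default IPD and the 5 mm radius are illustrative assumptions. This summary does not describe how EyeReal actually samples its viewpoints.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """3D point in a screen-centred frame (mm): x to the viewer's right, y up, z towards the viewer."""
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, s: float) -> "Vec3":
        return Vec3(self.x * s, self.y * s, self.z * s)


def eye_positions(midpoint: Vec3, yaw_rad: float = 0.0, ipd_mm: float = 63.0) -> tuple[Vec3, Vec3]:
    """Left and right eye centres for a head whose inter-eye midpoint is `midpoint`.

    The inter-ocular axis starts along +x and is rotated by `yaw_rad` about the vertical (y) axis.
    63 mm is a typical adult IPD, used here only as an assumed default.
    """
    axis = Vec3(math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))  # unit vector from left eye to right eye
    half = axis.scale(ipd_mm / 2.0)
    return midpoint + half.scale(-1.0), midpoint + half


def neighbourhood(eye: Vec3, radius_mm: float = 5.0, steps: int = 1) -> list[Vec3]:
    """Square grid of viewpoints spanning +/- radius_mm in x and y around one eye (eye included).

    (2 * steps + 1) ** 2 viewpoints are returned per eye; steps must be >= 1.
    """
    if steps < 1:
        raise ValueError("steps must be >= 1")
    spacing = radius_mm / steps
    return [
        eye + Vec3(i * spacing, j * spacing, 0.0)
        for i in range(-steps, steps + 1)
        for j in range(-steps, steps + 1)
    ]


if __name__ == "__main__":
    head = Vec3(0.0, 0.0, 600.0)  # hypothetical viewer 60 cm in front of the screen centre
    left, right = eye_positions(head, yaw_rad=math.radians(20))
    targets = neighbourhood(left) + neighbourhood(right)
    print(left, right, len(targets))
```

Sampling a small neighbourhood rather than a single point per eye is one simple way to keep the rendered views stable under small tracking errors and eye movements; a real system might instead sample randomly or weight views by distance from the pupil.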

Key Points

- EyeReal dynamically optimises scarce optical SBP around the eyes in real time, instead of statically partitioning resources across the whole display.

- The system fuses a physically accurate binocular (ocular) geometric model with a lightweight convolutional neural network: the eye geometry is fed to the network as an ocular geometric encoding, and the network computes layered phase patterns for the multilayer LCD stack.

- The prototype runs at ~50 frames per second at 1,920 × 1,080 on consumer hardware (NVIDIA RTX 4090) and uses only stacked LCD panels plus polarisers and a white LED backlight; no specialised holographic SLMs or lens arrays are required.

- Delivers full-parallax output (stereo, motion and focal cues), mitigating vergence–accommodation conflict (VAC) and interpupillary-distance (IPD) mismatches common in other autostereoscopic systems.

- Demonstrated ultrawide, seamless viewing well beyond 100° with stable omnidirectional transitions across horizontal, vertical and radial motion trajectories.

- Outperforms representative iterative view-dense and view-segmented methods in spatial consistency while running one to two orders of magnitude faster, with sub-second runtimes.

- The authors trained on a large, custom light-field dataset (object- and scene-level captures) and publish the code and data on GitHub for reproducibility.

- The approach is compatible with practical improvements (field-sequential colour, mini-LED backlighting, time multiplexing) and could extend toward multi-user setups with additional multiplexing strategies.
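The "layered phase patterns for multilayer LCD stacks" above can be illustrated with a simple forward model of how one ray picks up a value from each layer. This sketch assumes the polarisation-rotation model used in earlier multilayer-LCD work: each layer rotates a ray's polarisation by a per-pixel angle, the stack sits between crossed polarisers, and the transmitted intensity is sin² of the total rotation along the ray. Whether EyeReal uses exactly this model, as well as the helper names, the 5 mm layer spacing, the 1 mm pixel pitch and the nearest-pixel lookup, are assumptions for illustration only.

```python
import math


def layer_hit(eye, target, z_layer):
    """(x, y) where the ray from `eye` through `target` crosses the plane z = z_layer."""
    ex, ey, ez = eye
    tx, ty, tz = target
    t = (z_layer - ez) / (tz - ez)
    return ex + t * (tx - ex), ey + t * (ty - ey)


def ray_intensity(eye, target, layers, depths, pitch_mm):
    """Normalised intensity of one ray through a stack of polarisation-rotating LCD layers.

    layers[k][row][col] is the rotation (radians) applied by layer k; depths[k] is its z in mm.
    Panels are centred on x = y = 0 and share one pixel pitch. Between crossed polarisers,
    the transmitted intensity is sin^2 of the total rotation accumulated along the ray.
    """
    total = 0.0
    for phase, z in zip(layers, depths):
        x, y = layer_hit(eye, target, z)
        rows, cols = len(phase), len(phase[0])
        col = math.floor(x / pitch_mm + cols / 2)  # pixel containing the hit point (no interpolation)
        row = math.floor(y / pitch_mm + rows / 2)
        if not (0 <= row < rows and 0 <= col < cols):
            return 0.0  # ray misses this panel: treat it as blocked
        total += phase[row][col]
    return math.sin(total) ** 2


def constant_layer(n, value):
    """n x n panel with the same rotation at every pixel."""
    return [[value] * n for _ in range(n)]


if __name__ == "__main__":
    depths = [0.0, -5.0, -10.0]  # hypothetical 3-layer stack, front layer at z = 0
    back = constant_layer(4, 0.0)
    back[2][2] = math.pi / 2  # a single "on" pixel on the rear layer
    layers = [constant_layer(4, 0.0), constant_layer(4, 0.0), back]
    target = (0.5, 0.5, 0.0)  # a point on the front layer
    for eye in [(0.5, 0.5, 600.0), (120.5, 0.5, 600.0)]:
        print(eye, ray_intensity(eye, target, layers, depths, pitch_mm=1.0))
```

The two eye positions in the demo look through the same front-layer point but cross different rear-layer pixels, so they see different intensities. That parallax is what lets a layer stack show different images to each eye, and it is also why the phase patterns must be recomputed as the eyes move.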

Why should I read this?

Short version: if you care about the future of screens, XR or practical holography, this is neat — EyeReal shows software and smart optics modelling can do the heavy lifting, letting ordinary LCD hardware deliver genuinely wide‑angle, glasses‑free 3D. It’s an accessible, well‑validated step toward usable autostereoscopic displays without exotic hardware. Read it for a realistic view of how AI+physics can unblock long-standing display limits.

Author style

Punchy — this is not incremental. The paper reframes the core limitation (SBP) and presents a practical, implementable strategy that substantially narrows the gap between lab-scale holography and consumer displays. If you work on displays, XR, neural rendering or optical systems, the details are worth your time: the results are both technically solid and directly applicable.

Context and relevance

Autostereoscopic displays have historically been forced into trade-offs between viewing angle, display size and parallax completeness because of limited SBP. EyeReal sidesteps brute-force hardware scaling by reallocating optical information where the eyes need it most, leveraging a learned phase-decomposition method constrained by real binocular geometry. That shift — computationally steering limited optics in real time — aligns with broader industry trends: using AI to extend or replace complex hardware, enabling cheaper, more scalable XR and 3D display products. The paper therefore matters for manufacturers, XR platform developers and researchers exploring realistic, comfortable glasses-free 3D experiences.

Source

Source: https://www.nature.com/articles/s41586-025-09752-y

Notes

Code and dataset: https://github.com/WeijieMax/EyeReal. Prototype hardware: 3-layer LCD stack, Kinect V2 for eye localisation, single RTX 4090 for inference; further improvements (colour sequencing, mini‑LEDs) are discussed for deployment.
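The tracking side of the prototype can be sketched in a few lines. At ~50 Hz the whole pipeline has a frame period of about 20 ms, and each tracked eye position can be expressed as horizontal and vertical angles from the screen normal. For example, a 100° viewing range corresponds to ±50° if it is symmetric about the normal. The summary does not say how the Kinect V2 eye positions are filtered. The exponential moving average below (`EyeSmoother`, with `alpha` as a smoothing weight) is a hypothetical stand-in, as is the screen-centred millimetre frame (x right, y up, z towards the viewer).

```python
import math

FRAME_RATE_HZ = 50.0                      # ~50 Hz reported for the prototype
FRAME_BUDGET_MS = 1000.0 / FRAME_RATE_HZ  # frame period shared by tracking, inference and display


def viewing_angles_deg(eye):
    """Horizontal and vertical angles (degrees) of an eye position relative to the screen normal."""
    x, y, z = eye
    return math.degrees(math.atan2(x, z)), math.degrees(math.atan2(y, z))


class EyeSmoother:
    """Exponential moving average over tracked eye positions (hypothetical filter).

    alpha in (0, 1]: higher values follow new measurements more closely, lower values suppress jitter.
    """

    def __init__(self, alpha=0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None

    def update(self, measurement):
        if self.state is None:
            self.state = tuple(measurement)  # first sample initialises the filter
        else:
            a = self.alpha
            self.state = tuple(a * m + (1 - a) * s for m, s in zip(measurement, self.state))
        return self.state


if __name__ == "__main__":
    smoother = EyeSmoother(alpha=0.5)
    for raw in [(-700.0, 20.0, 600.0), (-690.0, 18.0, 605.0)]:  # hypothetical tracker samples (mm)
        eye = smoother.update(raw)
        print(FRAME_BUDGET_MS, viewing_angles_deg(eye))
```

Heavier smoothing reduces jitter but adds latency, so in practice the filter weight would be traded off against the 20 ms frame period.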