An AI for an AI: Anthropic says AI agents require AI defense

Summary

Anthropic’s security researchers have published a warning, along with a benchmark, showing that increasingly capable AI agents can discover and exploit vulnerabilities in blockchain smart contracts. The benchmark, SCONE-bench (Smart CONtracts Exploitation benchmark), is a dataset of 405 smart contracts drawn from DeFiHackLabs that were exploited in real attacks on Ethereum-compatible chains. It is used to evaluate how well tool-enabled models find and exploit financially damaging bugs.

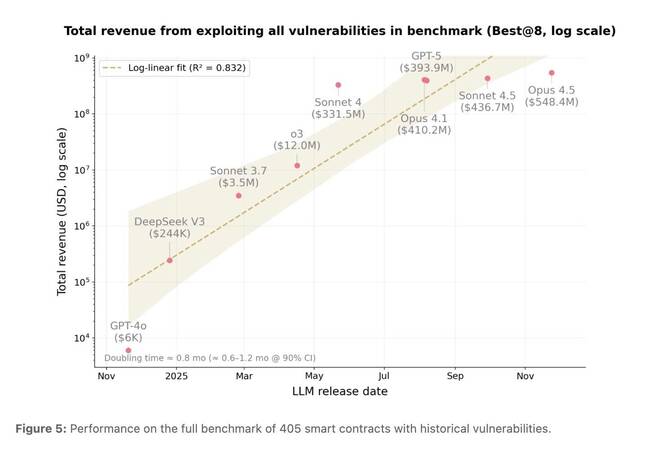

The researchers found that frontier models, including Anthropic’s Claude Opus 4.5 and Sonnet 4.5 plus OpenAI’s GPT-5, could generate exploit code with simulated revenue totalling about $4.6m for contracts deployed after the models’ training cut-off. Tests against 2,849 recently deployed contracts with no publicly disclosed vulnerabilities also turned up two zero-day flaws and a small amount of exploit revenue. Anthropic argues that the falling cost and rising effectiveness of AI-driven attacks mean defenders need to adopt AI-based defences proactively.

Key Points

- Anthropic released SCONE-bench to measure how AI agents exploit smart contract flaws.

- SCONE-bench contains 405 exploited smart contracts from Ethereum, Binance Smart Chain and Base, sourced from DeFiHackLabs (2020–2025).

- Frontier models (Claude Opus 4.5, Sonnet 4.5, GPT-5) produced exploit code worth an estimated $4.6m on contracts introduced after the models’ training cut-off.

- Run against 2,849 recently deployed contracts with no publicly known vulnerabilities, the agents found two zero-day flaws and produced exploits totalling $3,694.

- Anthropic reports low operational costs for attacks: average cost per agent run $1.22; average cost per vulnerable contract identified $1,738; average revenue per exploit $1,847; average net profit $109.

- Anthropic observed that simulated exploit revenue roughly doubled every 1.3 months over the last year, signalling rapidly improving offensive capability.

- The company concludes that AI must be put to work on defence, not just offence: defenders should deploy AI agents of their own to detect and mitigate AI-enabled attacks.
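The cost and growth figures in the key points hang together arithmetically, and a quick back-of-envelope check makes that visible. The Python sketch below reconciles the reported averages and shows what a 1.3-month doubling time implies over a year. It rests on assumptions the article does not state: one agent run per contract across all 2,849 scanned contracts, the two zero-day flaws being the only vulnerable contracts identified, and perfectly steady exponential growth. The function and constant names are hypothetical, chosen for illustration only.

```python
# Back-of-envelope checks on the figures reported in the key points.
# ASSUMPTIONS (not stated in the article): one agent run per contract
# across all 2,849 scanned contracts, the two zero-days counted as the
# only "vulnerable contracts identified", and steady exponential growth.
# All names here are hypothetical, for illustration only.

CONTRACTS_SCANNED = 2_849
COST_PER_RUN_USD = 1.22      # reported average cost per agent run
VULNERABLE_FOUND = 2         # the two zero-day flaws
TOTAL_REVENUE_USD = 3_694    # reported total exploit revenue
DOUBLING_TIME_MONTHS = 1.3   # reported doubling time of exploit revenue


def unit_economics(contracts, cost_per_run, vulnerable, revenue):
    """Return (total cost, cost per vulnerable contract,
    revenue per exploit, net profit per exploit)."""
    total_cost = contracts * cost_per_run
    cost_per_vuln = total_cost / vulnerable
    revenue_per_exploit = revenue / vulnerable
    return total_cost, cost_per_vuln, revenue_per_exploit, revenue_per_exploit - cost_per_vuln


def growth_factor(months, doubling_time=DOUBLING_TIME_MONTHS):
    """Multiplier implied by doubling every `doubling_time` months, over `months` months."""
    return 2 ** (months / doubling_time)


total, per_vuln, per_exploit, net = unit_economics(
    CONTRACTS_SCANNED, COST_PER_RUN_USD, VULNERABLE_FOUND, TOTAL_REVENUE_USD
)
print(f"total cost ${total:,.2f}; per vulnerable contract ${per_vuln:,.2f}")
print(f"revenue per exploit ${per_exploit:,.2f}; net per exploit ${net:,.2f}")
print(f"12-month growth at a {DOUBLING_TIME_MONTHS}-month doubling time: ~{growth_factor(12):.0f}x")
```

Under these assumptions the computed values round to the reported averages ($1,738, $1,847 and $109), which suggests the per-contract figures were derived from the scan's total cost split across the two flaws found. The growth check is cruder: if the 1.3-month doubling time held steadily for a full 12 months, exploit revenue would have grown roughly 600-fold.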

Context and relevance

This story sits at the intersection of AI, cybersecurity and blockchain finance. It reinforces a broader research trend: automated agents can increasingly perform complex offensive tasks cheaply and at scale. For anyone working in DeFi, smart contracts, platform security or threat intelligence, the findings underline a material shift: automated exploitation is becoming more affordable and practical, raising the stakes for proactive tooling, audit practices and rapid incident response.

Organisations relying on smart contracts or running public blockchain services should view this as an early warning: traditional testing and human-only red-teaming may not keep pace with agentic attackers. Anthropic’s push for AI-for-defence is positioned as a pragmatic response to that gap.

Author’s take

This is not academic hand-wringing; it’s a demonstration that AI agents can and will turn on-chain code into cash unless defenders level up with AI-assisted security. Read the details if you care about stopping automated theft.

Why should I read this?

Look, if you deal with crypto, smart contracts or AI security, this is one of those headlines you can’t ignore. Anthropic shows attacks are getting cheaper and more automated, so either you start using AI to hunt bugs before attackers do, or you fund their next payday. We’ve done the slog so you don’t have to: this summary pulls out the benchmark, the data and the core numbers you need to assess risk fast.

Source

Source: https://go.theregister.com/feed/www.theregister.com/2025/12/05/an_ai_for_an_ai/