AI is rewriting how power flows through the datacenter

Summary

Datacentres are being redesigned to cope with the huge power demands of AI accelerators. Rising rack densities and multi‑GPU systems (some GPUs draw around 700W each) are forcing changes all the way from the grid connection down to chip‑level power delivery. The article covers solid‑state transformers (SSTs) built on silicon carbide, faster solid‑state circuit protection, higher DC distribution voltages (800VDC or ±400V topologies), “power sidecar” racks that handle AC‑to‑DC conversion and backup, and changes to board‑ and chip‑level power delivery (back‑side vertical modules now, in‑package VRMs later). Vendors such as Infineon expect power semiconductors (SiC and GaN) to become strategic components in datacentre builds, with potential new markets through 2030.
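The push to higher DC voltages comes down to I = P / V. For the same power, a higher voltage means less current, and resistive (I²R) loss in a given conductor falls with the square of that current. The Python sketch below runs the numbers. The 1MW rack is the scale the article says hyperscalers are planning for. The 54V low‑voltage baseline bus and the 0.8V accelerator core voltage are illustrative assumptions, not figures from the article.

```python
"""Back-of-envelope: current and resistive loss versus distribution voltage.

Assumptions (illustrative, not from the article): a 54 V low-voltage bus as the
baseline and a 0.8 V accelerator core voltage. Real designs differ.
"""


def current_amps(power_w: float, voltage_v: float) -> float:
    """DC current needed to deliver power_w at voltage_v (I = P / V)."""
    if voltage_v <= 0:
        raise ValueError("voltage must be positive")
    return power_w / voltage_v


def relative_conduction_loss(v_low: float, v_high: float) -> float:
    """I^2 * R loss at v_high relative to v_low, for the same power through the
    same conductor resistance. Since I = P / V, the loss scales as 1 / V^2."""
    return (v_low / v_high) ** 2


if __name__ == "__main__":
    rack_w = 1_000_000  # 1 MW rack, the scale hyperscalers are planning for
    for volts in (54, 400, 800):  # 54 V is an assumed low-voltage baseline
        print(f"{volts:>4} V bus: {current_amps(rack_w, volts):>8,.0f} A")
    print(f"800 V vs 54 V conduction loss: {relative_conduction_loss(54, 800):.4f}x")
    # A ~700 W accelerator fed from an assumed 0.8 V core rail
    print(f"700 W at 0.8 V: {current_amps(700, 0.8):,.0f} A")
```

At 800V, a megawatt needs 1,250A rather than roughly 18,500A at 54V. The same arithmetic puts a 700W accelerator at about 875A on its assumed core rail, which is why the last conversion stages keep moving closer to the die.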

Key Points

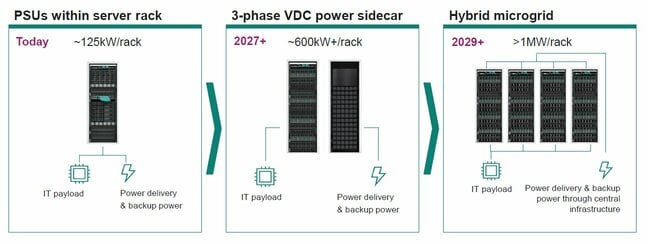

- AI accelerators are dramatically increasing rack power: some rack designs and appliances target hundreds of kW, and hyperscalers are planning racks of up to 1MW.

- Traditional transformers and electromechanical protection gear face long lead times and size limits; solid‑state alternatives (SSTs and SiC/GaN circuit breakers) reduce weight and switch far faster.

- Higher‑voltage DC distribution (800VDC, or hybrid ±400V topologies) is being promoted to cut conversion losses and support megawatt‑scale racks.

- “Power sidecars” (separate racks for power delivery and backup) will bridge current infrastructure to denser future racks, enabling 600kW+ rack configurations before full integration.

- PSU and VRM designs are shifting: larger three‑phase PSUs and moves from discrete VRMs to back‑side vertical modules (BVM), with in‑package VRMs planned beyond 2027 to cut parasitic losses.

- Power semiconductors (silicon carbide, gallium nitride) are central to these changes and could create a significant new market for suppliers by 2030.
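The conversion‑loss argument in these points is multiplicative: power passes through several conversion stages in series, so end‑to‑end efficiency is the product of each stage's efficiency, and removing a stage removes its loss entirely. The Python sketch below shows that arithmetic. Every stage count and efficiency in it is a hypothetical placeholder, not a figure from the article.

```python
"""Sketch: end-to-end efficiency of power conversion stages in series.

All stage efficiencies below are hypothetical placeholders chosen only to
illustrate the arithmetic; they are not figures from the article.
"""
from math import prod


def chain_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of stages in series: the product of each stage's efficiency."""
    for eta in stage_efficiencies:
        if not 0.0 < eta <= 1.0:
            raise ValueError(f"stage efficiency must be in (0, 1], got {eta}")
    return prod(stage_efficiencies)


def upstream_loss_w(delivered_w: float, stage_efficiencies: list[float]) -> float:
    """Power lost in the chain while delivering delivered_w: input minus output."""
    return delivered_w / chain_efficiency(stage_efficiencies) - delivered_w


if __name__ == "__main__":
    load_w = 1_000_000  # hypothetical 1 MW of accelerator load
    chains = {
        "four-stage chain (hypothetical)": [0.97, 0.96, 0.95, 0.97],
        "three-stage chain (hypothetical)": [0.98, 0.97, 0.97],
    }
    for name, stages in chains.items():
        eta = chain_efficiency(stages)
        loss = upstream_loss_w(load_w, stages)
        print(f"{name}: {eta:.1%} end to end, {loss:,.0f} W lost")
```

Whatever the real per‑stage figures are, the structure of the calculation is the point: at megawatt scale, each percentage point of chain efficiency is worth kilowatts of heat that no longer has to be supplied or cooled.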

Context and relevance

The surge in AI compute, with hyperscalers planning 1MW racks, is exposing electrical limits in datacentre design, supply chains and local distribution. This story matters to datacentre operators, equipment vendors, chipmakers and facilities engineers because it describes how power architectures will evolve to meet AI’s energy appetite, and where investment and supply constraints are likely to appear. It also ties into broader trends: efforts to lower power usage effectiveness (PUE), reduce conversion losses, and avoid long lead times for heavy electromechanical kit.

Why should I read this?

If you buy, build, run or spec datacentres (or supply anything to them), this is the plumbing memo you didn’t know you needed. It explains why power semiconductors are becoming as strategic as GPUs, and why your next budget should cover more than compute boxes. It also saves you a few hours of digging through vendor blogs.

Author style

Punchy. The write‑up stresses urgency around supply chains, efficiency and architecture shifts, and states its key point plainly rather than burying it: power semiconductors are becoming both a datacentre bottleneck and an opportunity.

Source

Source: https://go.theregister.com/feed/www.theregister.com/2025/12/22/ai_power_datacenter/