Every conference is an AI conference as Nvidia unpacks its Vera Rubin CPUs and GPUs at CES

Summary

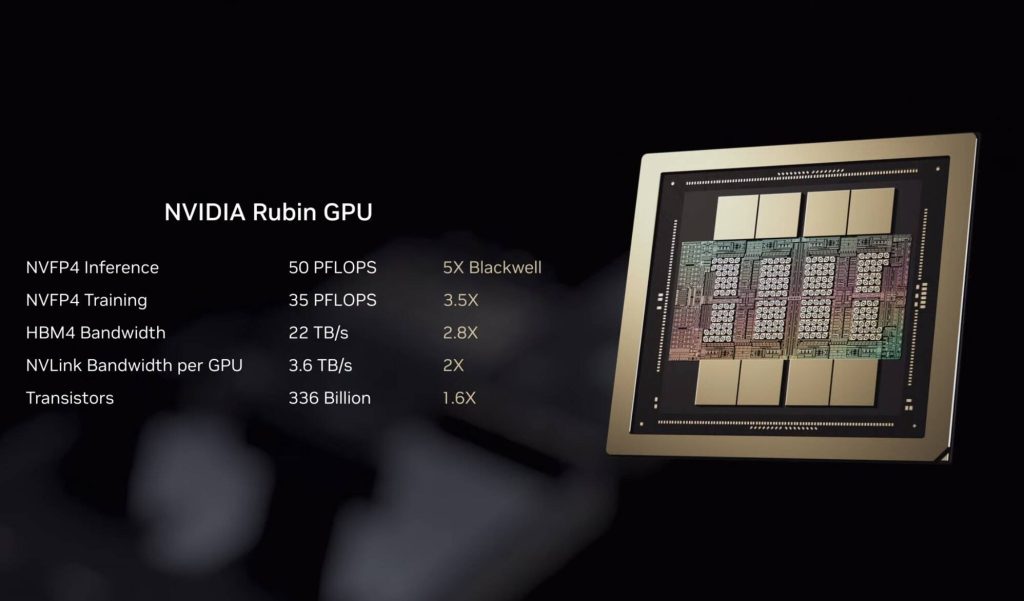

Nvidia used CES to preview its Vera Rubin platform — CPUs, GPUs and rack systems — ahead of the expected second-half-2026 shipment window. The Vera Rubin superchip (likely VR200) pairs two dual-die Rubin GPUs with a new 88-core Vera Arm CPU, high-bandwidth HBM4 memory, and a faster NvLink interconnect. Nvidia claims big generative-AI gains versus Blackwell: up to 5x higher FP performance for inference, 3.5x for training, and roughly 2.8x more memory bandwidth. The flagship NVL72 rack is refined for serviceability, telemetry and confidential computing across NvLink.

Key supporting pieces include Rubin CPX accelerators for LLM prefill, ConnectX-9 1.6Tbps superNICs, and BlueField-4 DPUs (800Gbps + 64-core Grace) for storage, security and offload. Nvidia also outlined an Inference Context Storage approach to offload large KV caches, aiming to keep GPUs generating tokens rather than waiting on data. AMD’s Helios racks remain competitive — especially on HBM capacity — so the infra race is heating up. Nvidia also teased Rubin Ultra / Kyber racks for 2027 that will demand much larger datacentre power budgets.

Key Points

- Vera Rubin superchip (expected VR200) combines two dual-die Rubin GPUs with a new 88-core Vera Arm CPU.

- Nvidia claims up to 5x inference and 3.5x training floating-point gains vs Blackwell, with 2.8x memory bandwidth (22 TB/s per socket).

- NVL72 racks contain 72 Rubin GPUs, 36 Vera CPUs and beefed-up LPDDR5x and HBM4 capacity and bandwidth; improved serviceability and telemetry are highlighted.

- Rubin CPX accelerators target the LLM prefill phase (30 petaFLOPS NVFP4, 128 GB GDDR7) to reduce bottlenecks.

- ConnectX-9 (1.6 Tbps) and BlueField-4 DPUs (800 Gbps NIC + 64-core Grace) are central to networking, storage offload and security.

- Inference Context Storage and KV cache offload work with BlueField-4 to keep GPUs focused on token generation rather than recomputation.

- AMD Helios remains a strong competitor with higher per-socket HBM4 capacity (432 GB vs Rubin’s 288 GB) and comparable FP performance on some workloads.

- Nvidia previewed Rubin Ultra / Kyber racks for 2027 (huge power demands — ~600 kW per rack expected) and Ultra GPUs with 1 TB HBM4e and 100 petaFLOPS FP4 per GPU.

Why should I read this?

Short version: if you care about buying, running or designing AI datacentre kit, this matters. Nvidia has shown the shape of the next-gen pieces — more speed, more bandwidth, new accelerators and infrastructure tricks to dodge inference bottlenecks. It’s not shipping yet, but the roadmap affects purchasing, capacity planning and power readiness now. We read it so you don’t have to — but you should skim the details if you’re in infra, AI ops or procurement.

Context and Relevance

This CES peek is less about an immediate product launch and more about signalling: vendors and customers buy roadmaps. Nvidia’s Rubin pushes the industry toward heavier memory bandwidth, tighter GPU-CPU fabric and larger offload-capable DPUs. That intensifies competition with AMD’s Helios and cloud providers’ custom stacks, and forces datacentre operators to rethink power, cooling and networking plans ahead of 2027’s Rubin Ultra racks. The Inference Context Storage approach also reflects a wider trend — shifting work off GPUs to specialised memory and DPUs to improve throughput for LLM inference at scale.

Source

Source: https://www.theregister.com/2026/01/05/ces_rubin_nvidia/