Every conference is an AI conference as Nvidia unpacks its Vera Rubin CPUs and GPUs at CES

Summary

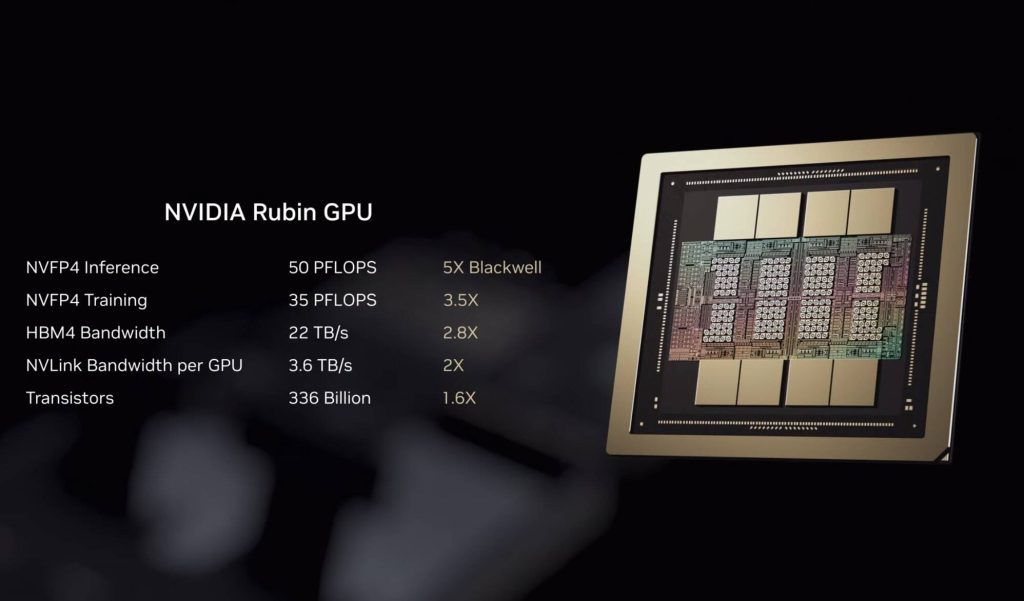

Nvidia used CES to preview its Vera Rubin platform — CPUs, GPUs and rack systems — emphasising AI-first hardware and software for datacentre and edge use. The NVL72 rack, built around the Vera Rubin superchip (two Rubin GPUs plus a Vera CPU), promises big gains over Blackwell: up to 5x inference FP performance, 3.5x training and 2.8x memory bandwidth increases. Key platform elements include Rubin GPUs with HBM4, Vera Arm-based CPUs, faster NvLink interconnects, BlueField-4 DPUs and the new ConnectX-9 “superNIC”.

Nvidia also highlighted specialised Rubin CPX accelerators for LLM prefill, an Inference Context Storage approach to offload KV caches, and software stacks for robotics and autonomous driving. Rubin is due in the second half of the year; what was revealed is refinement and system-level detail rather than an earlier ship date.

Key Points

- Vera Rubin NVL72 racks centre on a Vera Rubin superchip (two Rubin GPUs + Vera CPU), offering large FP4/FP training and inference gains versus Blackwell.

- Rubin GPUs pair 288 GB HBM4 per GPU (576 GB per superchip) with 22 TB/s per socket, and a faster NvLink-C2C (1.8 TB/s) between CPU and GPU.

- NVL72 configuration: 72 Rubin GPUs, 36 Vera CPUs, 20.7 TB HBM4, 54 TB LPDDR5x and doubled NvSwitch bandwidth vs last gen.

- Rubin CPX accelerators target LLM prefill workloads (30 petaFLOPS NVFP4, 128 GB GDDR7) to reduce memory-bandwidth pressure on HBM-equipped GPUs.

- BlueField-4 DPUs (with ConnectX-9 NIC and 64-core Grace CPU) plus Inference Context Storage aim to offload KV caches and reduce IO and compute stalls during inference.

- Nvidia teased Kyber/Rubin Ultra for 2027 with much higher power and scale (600 kW racks, Rubin Ultra GPUs and multi-exaFLOPS domains).

- AMD’s Helios racks remain a competitive alternative on paper (higher HBM4 capacity per socket), making real-world software and scaling behaviour crucial.

Context and relevance

Nvidia’s CES reveal is less about surprising ship dates and more about signalling system direction to enterprise buyers and datacentre operators. The emphasis on system-level engineering — serviceability, telemetry, network and DPU offload, and specialised accelerators — shows how AI workloads are reshaping hardware design. Organisations planning procurement, capacity, power and cooling should take note: rack power density, memory bandwidth and inference memory strategies are now central to architecture choices.

Why should I read this?

Quick take: if you care about where AI infrastructure is actually heading (not just flashy model demos), this is worth a skim. Nvidia’s CES update spells out the real trade-offs — memory bandwidth vs capacity, specialised prefill chips vs HBM-equipped GPUs, and the networking/DPU glue that makes huge clusters behave. Saves you poking through long press releases and datasheets while you decide whether to refresh kit or pencil in datacentre upgrades.

Source

Source: https://go.theregister.com/feed/www.theregister.com/2026/01/05/ces_rubin_nvidia/